PyTorch: https://github.com/shanglianlm0525/PyTorch-Networks

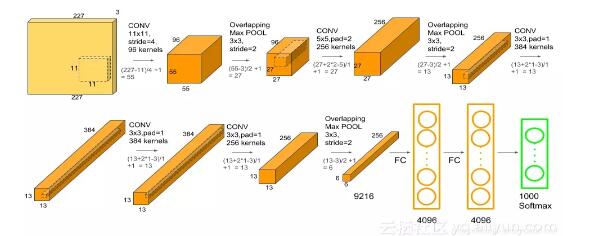

```python
import torch
import torch.nn as nn
import torchvision


class AlexNet(nn.Module):
    def __init__(self, num_classes=1000):
        super(AlexNet, self).__init__()
        # Five convolutional layers interleaved with three max-pooling stages
        self.feature_extraction = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=96, kernel_size=11, stride=4, padding=2, bias=False),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=0),
            nn.Conv2d(in_channels=96, out_channels=192, kernel_size=5, stride=1, padding=2, bias=False),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=0),
            nn.Conv2d(in_channels=192, out_channels=384, kernel_size=3, stride=1, padding=1, bias=False),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_channels=384, out_channels=256, kernel_size=3, stride=1, padding=1, bias=False),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_channels=256, out_channels=256, kernel_size=3, stride=1, padding=1, bias=False),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=0),
        )
        # Three fully connected layers; the last one produces the class scores
        self.classifier = nn.Sequential(
            nn.Dropout(p=0.5),
            nn.Linear(in_features=256*6*6, out_features=4096),
            nn.ReLU(inplace=True),
            nn.Dropout(p=0.5),
            nn.Linear(in_features=4096, out_features=4096),
            nn.ReLU(inplace=True),
            nn.Linear(in_features=4096, out_features=num_classes),
        )

    def forward(self, x):
        x = self.feature_extraction(x)
        # Flatten the 256 x 6 x 6 feature maps into one vector per sample
        x = x.view(x.size(0), 256*6*6)
        x = self.classifier(x)
        return x


if __name__ == '__main__':
    # model = torchvision.models.AlexNet()
    model = AlexNet()
    print(model)

    # A batch of 8 random RGB images at 224 x 224 (renamed to avoid shadowing the built-in input)
    inputs = torch.randn(8, 3, 224, 224)
    out = model(inputs)
    print(out.shape)
```
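The `256*6*6` in the first `nn.Linear` layer and in `forward` depends on the input resolution. As a rough check, the sketch below traces the spatial size through the layers of `feature_extraction` using the usual floor-based output-size formula for convolution and pooling (dilation 1, no ceil mode). The names `conv_out`, `LAYERS`, and `feature_map_sizes` are hypothetical helpers for illustration and are not part of the original code; the 224 x 224 input size is taken from the test in the listing.

```python
def conv_out(size, kernel_size, stride=1, padding=0):
    """Output width/height of a conv or max-pool layer (dilation 1, floor rounding)."""
    return (size + 2 * padding - kernel_size) // stride + 1


# (kernel_size, stride, padding) of each spatial layer in feature_extraction, in order.
# ReLU layers are omitted because they do not change the spatial size.
LAYERS = [
    (11, 4, 2),  # conv 1
    (3, 2, 0),   # max pool 1
    (5, 1, 2),   # conv 2
    (3, 2, 0),   # max pool 2
    (3, 1, 1),   # conv 3
    (3, 1, 1),   # conv 4
    (3, 1, 1),   # conv 5
    (3, 2, 0),   # max pool 3
]


def feature_map_sizes(input_size=224):
    """Return the spatial size before the first layer and after every layer."""
    sizes = [input_size]
    for kernel_size, stride, padding in LAYERS:
        sizes.append(conv_out(sizes[-1], kernel_size, stride, padding))
    return sizes


sizes = feature_map_sizes(224)
print(sizes)          # [224, 55, 27, 27, 13, 13, 13, 13, 6]

# The last conv layer has 256 output channels, so the flattened vector has:
flat_features = 256 * sizes[-1] ** 2
print(flat_features)  # 9216, i.e. 256*6*6
```

With a different input resolution the final spatial size may no longer be 6, in which case the hard-coded `256*6*6` in the model would need to change accordingly.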

That is everything in this example of implementing AlexNet in PyTorch. We hope it serves as a useful reference, and we hope you will continue to support 服务器之家.

Original article: https://blog.csdn.net/shanglianlm/article/details/86424857