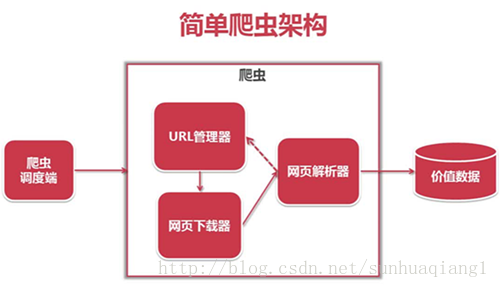

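This post walks through a Python crawler example. It focuses on the crawler's architecture and on the key modules that make up a crawler: the URL manager, the HTML downloader, and the HTML parser.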

Simple crawler architecture

Program entry point (crawler scheduler)

    # coding: utf8
    import datetime

    from maya_spider import url_manager, html_downloader, html_parser, html_outputer


    class spider_main(object):
        # Initialization
        def __init__(self):
            # URL manager
            self.urls = url_manager.urlmanager()
            # HTML downloader
            self.downloader = html_downloader.htmldownloader()
            # HTML parser
            self.parser = html_parser.htmlparser()
            # HTML outputer
            self.outputer = html_outputer.htmloutputer()

        # Crawler scheduling loop
        def craw(self, root_url):
            count = 1
            self.urls.add_new_url(root_url)
            while self.urls.has_new_url():
                try:
                    new_url = self.urls.get_new_url()
                    print('craw %d : %s' % (count, new_url))
                    html_content = self.downloader.download(new_url)
                    new_urls, new_data = self.parser.parse(new_url, html_content)
                    self.urls.add_new_urls(new_urls)
                    self.outputer.collect_data(new_data)
                    # Stop after 10 pages
                    if count == 10:
                        break
                    count = count + 1
                except Exception as e:
                    # Report the failure and move on to the next URL
                    print('craw failed: %s' % e)
            self.outputer.output_html()


    if __name__ == '__main__':
        # Entry URL for the crawl
        root_url = 'http://baike.baidu.com/view/21087.htm'
        # Start time
        print('Timer started..............')
        start_time = datetime.datetime.now()
        obj_spider = spider_main()
        obj_spider.craw(root_url)
        # End time
        end_time = datetime.datetime.now()
        print('Total time: %ds' % (end_time - start_time).seconds)
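To see the scheduling loop on its own, without any network access, here is a minimal offline sketch. The `FAKE_SITE` dictionary and the `crawl` function are hypothetical stand-ins introduced only for illustration; they are not part of the `maya_spider` package. The dictionary lookup plays the role of the download and parse steps.

```python
# Hypothetical in-memory "site": each URL maps to (title, outgoing links).
FAKE_SITE = {
    'page/a': ('A', ['page/b', 'page/c']),
    'page/b': ('B', ['page/a', 'page/c']),
    'page/c': ('C', ['page/a']),
}


def crawl(root_url, max_pages=10):
    """Visit pages starting from root_url, never visiting one URL twice."""
    new_urls = {root_url}   # discovered but not yet visited
    old_urls = set()        # already visited
    collected = []          # data gathered from each visited page
    while new_urls and len(collected) < max_pages:
        url = new_urls.pop()
        old_urls.add(url)
        title, links = FAKE_SITE[url]   # stands in for download + parse
        collected.append({'url': url, 'title': title})
        for link in links:
            if link not in old_urls:
                new_urls.add(link)
    return collected


pages = crawl('page/a')
print(sorted(p['title'] for p in pages))  # ['A', 'B', 'C']
```

Although the pages link back to each other, each one is visited exactly once, which is the job the URL manager does in the real crawler.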

URL manager

    class urlmanager(object):
        def __init__(self):
            self.new_urls = set()   # URLs waiting to be crawled
            self.old_urls = set()   # URLs already crawled

        def add_new_url(self, url):
            if url is None:
                return
            # Only add URLs that have not been seen before
            if url not in self.new_urls and url not in self.old_urls:
                self.new_urls.add(url)

        def add_new_urls(self, urls):
            if urls is None or len(urls) == 0:
                return
            for url in urls:
                self.add_new_url(url)

        def has_new_url(self):
            return len(self.new_urls) != 0

        def get_new_url(self):
            # Move one URL from the pending set to the crawled set
            new_url = self.new_urls.pop()
            self.old_urls.add(new_url)
            return new_url
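Because `set.pop()` returns an arbitrary element, the manager above gives no guarantee about crawl order. If a first-in, first-out order is wanted, one possible variant is sketched below. `FifoUrlManager` is a hypothetical name and is not from the original article; it keeps the same method names so it could be swapped in.

```python
from collections import deque


class FifoUrlManager:
    """Hypothetical variant: hands URLs back in the order they were added."""

    def __init__(self):
        self.queue = deque()   # URLs waiting to be crawled, oldest first
        self.seen = set()      # every URL ever added, for de-duplication

    def add_new_url(self, url):
        if url is None or url in self.seen:
            return
        self.seen.add(url)
        self.queue.append(url)

    def add_new_urls(self, urls):
        for url in urls or []:
            self.add_new_url(url)

    def has_new_url(self):
        return len(self.queue) != 0

    def get_new_url(self):
        return self.queue.popleft()


manager = FifoUrlManager()
manager.add_new_urls(['u1', 'u2', 'u1'])   # the duplicate 'u1' is ignored
first = manager.get_new_url()
manager.add_new_url('u1')                  # already seen, so not re-queued
print(first, manager.get_new_url(), manager.has_new_url())  # u1 u2 False
```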

Web page downloader

    import urllib.request


    class htmldownloader(object):
        def download(self, url):
            if url is None:
                return None
            # Pose as a browser; CSDN refuses plain, direct requests
            user_agent = 'Mozilla/4.0 (compatible; MSIE 5.5; Windows NT)'
            headers = {'User-Agent': user_agent}
            # Build the request
            req = urllib.request.Request(url, headers=headers)
            # Fetch the page
            response = urllib.request.urlopen(req)
            # In Python 3, read() returns a bytes object rather than a str,
            # so convert it with bytes.decode()
            return response.read().decode()
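The two ideas in the downloader, sending a browser-like `User-Agent` and decoding the bytes that come back, can be tried without touching the network. The sketch below only builds a request object and decodes a byte string; `build_request` and `decode_body` are hypothetical helper names, and the fallback to UTF-8 with replacement characters is an assumption rather than something the original code does.

```python
import urllib.request


def build_request(url, user_agent='Mozilla/5.0'):
    """Build a request with a browser-like User-Agent; nothing is sent here."""
    return urllib.request.Request(url, headers={'User-Agent': user_agent})


def decode_body(body, charset=None):
    """Decode raw bytes, defaulting to UTF-8 and replacing undecodable bytes."""
    return body.decode(charset or 'utf-8', errors='replace')


req = build_request('http://example.com/')
# urllib stores header names capitalized, hence 'User-agent'
print(req.get_header('User-agent'))              # Mozilla/5.0
print(decode_body('爬虫'.encode('gbk'), 'gbk'))   # 爬虫
```

With a real response, the declared charset can usually be read from `response.headers.get_content_charset()` and passed in as `charset`; passing a `timeout` to `urlopen` is also worth considering so a slow page cannot stall the crawl.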

Web page parser

    import re
    from urllib.parse import urljoin

    from bs4 import BeautifulSoup


    class htmlparser(object):
        def _get_new_urls(self, page_url, soup):
            new_urls = set()
            # Entry links contain /item/ (older pages used /view/123.htm)
            links = soup.find_all('a', href=re.compile(r'/item/.*?'))
            for link in links:
                new_url = link['href']
                # Turn the relative link into an absolute URL
                new_full_url = urljoin(page_url, new_url)
                new_urls.add(new_full_url)
            return new_urls

        # Extract the title and the summary
        def _get_new_data(self, page_url, soup):
            # New dictionary for this page's data
            res_data = {}
            # URL
            res_data['url'] = page_url
            # Title tag: <dd class="lemmaWgt-lemmaTitle-title"><h1>python</h1>
            title_node = soup.find('dd', class_="lemmaWgt-lemmaTitle-title").find('h1')
            print(str(title_node.get_text()))
            res_data['title'] = str(title_node.get_text())
            # Summary tag: <div class="lemma-summary" label-module="lemmaSummary">
            summary_node = soup.find('div', class_="lemma-summary")
            res_data['summary'] = summary_node.get_text()
            return res_data

        def parse(self, page_url, html_content):
            if page_url is None or html_content is None:
                # Return a pair so the caller can always unpack two values
                return None, None
            # html_content is already a decoded str, so no from_encoding is needed
            soup = BeautifulSoup(html_content, 'html.parser')
            new_urls = self._get_new_urls(page_url, soup)
            new_data = self._get_new_data(page_url, soup)
            return new_urls, new_data
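The link-extraction step can be illustrated on a small inline snippet. The sketch below deliberately swaps BeautifulSoup for the standard library's `html.parser.HTMLParser` so that it runs with no third-party packages; `LinkCollector` and `sample_html` are hypothetical names made up for this example. It keeps the same rule as the parser above: take `<a>` tags whose `href` contains `/item/` and resolve them against the page URL with `urljoin`.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Hypothetical helper: collects absolute URLs of <a href=".../item/..."> links."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.links = set()   # a set, so repeated links are stored once

    def handle_starttag(self, tag, attrs):
        if tag != 'a':
            return
        href = dict(attrs).get('href')
        if href and '/item/' in href:
            self.links.add(urljoin(self.page_url, href))


sample_html = '''
<a href="/item/Python">Python</a>
<a href="/item/Guido">Guido</a>
<a href="/help">Help</a>
<a href="/item/Python">Python again</a>
'''
collector = LinkCollector('http://baike.baidu.com/view/21087.htm')
collector.feed(sample_html)
print(sorted(collector.links))
# ['http://baike.baidu.com/item/Guido', 'http://baike.baidu.com/item/Python']
```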

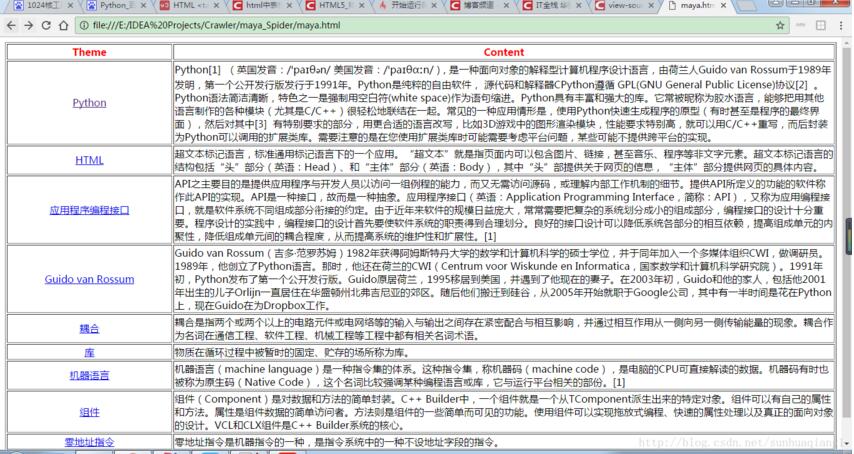

Web page outputer

    class htmloutputer(object):
        def __init__(self):
            self.datas = []

        def collect_data(self, data):
            if data is None:
                return
            self.datas.append(data)

        def output_html(self):
            # The with block closes the file even if a write fails
            with open('maya.html', 'w', encoding='utf-8') as fout:
                fout.write('<html>')
                fout.write("<head><meta http-equiv='content-type' content='text/html;charset=utf-8'></head>")
                fout.write('<body>')
                fout.write('<table border="1">')
                # Header row (the url column is left out)
                fout.write('''<tr style="color:red" width="90%">
                    <th>theme</th>
                    <th width="80%">content</th>
                    </tr>''')
                for data in self.datas:
                    fout.write('<tr>\n')
                    # The title links back to the crawled page
                    fout.write('\t<td align="center"><a href=\'%s\'>%s</a></td>' % (data['url'], data['title']))
                    fout.write('\t<td>%s</td>\n' % data['summary'])
                    fout.write('</tr>\n')
                fout.write('</table>')
                fout.write('</body>')
                fout.write('</html>')
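Titles and summaries scraped from real pages may contain characters such as `&` or `<`, which would break the generated table if written as-is. One possible refinement, not present in the original code, is to escape each value with the standard library's `html.escape` before writing it. `render_row` below is a hypothetical helper shown only as a sketch.

```python
import html


def render_row(data):
    """Render one table row, escaping text so it cannot break the markup."""
    url = html.escape(data['url'], quote=True)
    title = html.escape(data['title'])
    summary = html.escape(data['summary'])
    return ('<tr><td align="center"><a href="%s">%s</a></td>'
            '<td>%s</td></tr>' % (url, title, summary))


row = render_row({
    'url': 'http://example.com/item/x',
    'title': 'C & C++',
    'summary': 'Uses <templates>',
})
print(row)
```

The printed row contains `C &amp; C++` and `&lt;templates&gt;`, so a browser displays the original text instead of interpreting it as markup.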

Results

Appendix: complete code


Original article: https://blog.csdn.net/sunhuaqiang1/article/details/66472363