tf-idf

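Preface

A while ago I went back over my old notes on tf-idf, so I am publishing them on this blog. Knowledge needs to be revisited regularly, otherwise it starts to feel unfamiliar.

Understanding tf-idf

tf-idf (term frequency–inverse document frequency) is a weighting technique commonly used in information retrieval and text mining. The main idea is that if a word or phrase occurs with high frequency (tf) in one document and rarely occurs in other documents, it discriminates well between categories and is well suited for classification. tf-idf is simply tf * idf, where tf is the term frequency and idf is the inverse document frequency. tf is the frequency with which a term occurs in document d. The main idea of idf is that the fewer documents contain term t, that is, the smaller n is, the larger idf is, and the better term t discriminates between categories.

Suppose the number of documents in some class c that contain term t is m, and the number of documents in the other classes that contain t is k. The total number of documents containing t is then n = m + k. When m is large, n is also large, so the idf formula yields a small value, which suggests that term t does not discriminate well. In practice, however, a term that occurs frequently in the documents of one class represents the text of that class well. Such terms should receive a higher weight and be selected as feature words that distinguish the class from the others. This is the shortcoming of idf.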
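To make this shortcoming concrete, here is a minimal sketch with made-up numbers: a corpus of 100 documents and a term t that appears in k = 2 documents outside class c. The class and method names are illustrative and do not come from the original code.

```java
public class IdfShortcoming {

    // Plain idf: log(total documents / documents containing the term).
    public static double idf(int totalDocs, int docsWithTerm) {
        return Math.log((double) totalDocs / docsWithTerm);
    }

    public static void main(String[] args) {
        int totalDocs = 100; // assumed corpus size
        int k = 2;           // assumed: documents of other classes containing t

        // m = documents of class c containing t, so n = m + k
        for (int m : new int[] {2, 18}) {
            int n = m + k;
            System.out.println("m=" + m + " n=" + n + " idf=" + idf(totalDocs, n));
        }
    }
}
```

As m grows from 2 to 18, idf drops from roughly 3.22 to roughly 1.61, even though the term has become more characteristic of class c.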

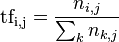

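The tf formula:

$$tf_{i,j} = \frac{n_{i,j}}{\sum_k n_{k,j}}$$

In the expression above, $n_{i,j}$ is the number of times the term occurs in document $d_j$, and the denominator is the total number of occurrences of all terms in document $d_j$.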
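The tf definition above can be sketched in a few lines of Java. This is only an illustration: it assumes the document has already been split into tokens, and `TfSketch` and `termFrequency` are hypothetical names.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class TfSketch {

    // tf(t, d) = (occurrences of t in d) / (total number of tokens in d)
    public static Map<String, Double> termFrequency(List<String> tokens) {
        Map<String, Integer> counts = new HashMap<String, Integer>();
        for (String token : tokens) {
            counts.merge(token, 1, Integer::sum);
        }
        Map<String, Double> tf = new HashMap<String, Double>();
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            tf.put(e.getKey(), e.getValue() / (double) tokens.size());
        }
        return tf;
    }

    public static void main(String[] args) {
        // A pre-tokenized toy document (assumption: tokenization is already done).
        List<String> doc = Arrays.asList("apple", "banana", "apple", "cherry");
        System.out.println(termFrequency(doc).get("apple")); // 2 of 4 tokens: prints 0.5
    }
}
```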

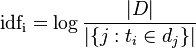

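The idf formula:

$$idf_i = \log \frac{|D|}{|\{j : t_i \in d_j\}|}$$

$|D|$: the total number of documents in the corpus.

$|\{j : t_i \in d_j\}|$: the number of documents that contain the term $t_i$ (that is, the number of documents for which $n_{i,j} \neq 0$). If the term does not occur in the corpus, the divisor is zero, so in practice $1 + |\{j : t_i \in d_j\}|$ is generally used instead.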
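A minimal sketch of the smoothed variant, log(|D| / (1 + df)), shows why the +1 matters for terms that never occur in the corpus. It assumes each document is represented as a set of its distinct terms; `IdfSketch` and `smoothedIdf` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

public class IdfSketch {

    // idf(t) = log(|D| / (1 + number of documents containing t))
    public static double smoothedIdf(List<Set<String>> docs, String term) {
        int df = 0;
        for (Set<String> doc : docs) {
            if (doc.contains(term)) {
                df++;
            }
        }
        // The +1 keeps the divisor non-zero when df == 0.
        return Math.log(docs.size() / (1.0 + df));
    }

    public static void main(String[] args) {
        List<Set<String>> docs = new ArrayList<Set<String>>();
        docs.add(new HashSet<String>(Arrays.asList("a", "b")));
        docs.add(new HashSet<String>(Arrays.asList("a", "c")));
        docs.add(new HashSet<String>(Arrays.asList("a", "d")));
        docs.add(new HashSet<String>(Arrays.asList("e")));

        System.out.println(smoothedIdf(docs, "a"));   // df = 3: log(4 / 4)
        System.out.println(smoothedIdf(docs, "zzz")); // df = 0: log(4 / 1), no division by zero
    }
}
```

One side effect of this variant is that a term present in every document gets a slightly negative idf, since |D| / (1 + |D|) is below 1.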

Then:

$$tfidf_{i,j} = tf_{i,j} \times idf_i$$
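As a quick worked example with assumed numbers: a term occurs 3 times in a 100-word document, and 10 of the 1,000 documents in the corpus contain it. Using the natural logarithm and the unsmoothed idf:

```latex
\mathrm{tf} = \frac{3}{100} = 0.03, \qquad
\mathrm{idf} = \ln\frac{1000}{10} \approx 4.605, \qquad
\mathrm{tf} \times \mathrm{idf} \approx 0.03 \times 4.605 \approx 0.138
```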

tf-idf implementation (Java)

This implementation uses the external IKAnalyzer library (ikanalyzer-2012.jar) for word segmentation.

The code is as follows:


```java
package tfidf;

import java.io.*;
import java.util.*;

import org.wltea.analyzer.lucene.IKAnalyzer;

public class ReadFiles {

    // Absolute paths of every file found so far (filled by readDirs).
    private static ArrayList<String> fileList = new ArrayList<String>();

    // Collect all files under a directory, including its sub-directories.
    public static List<String> readDirs(String filepath) throws FileNotFoundException, IOException {
        File file = new File(filepath);
        if (!file.isDirectory()) {
            System.out.println("The input path is not a directory");
            System.out.println("filepath:" + file.getAbsolutePath());
        } else {
            String[] flist = file.list();
            for (int i = 0; i < flist.length; i++) {
                // File(parent, child) avoids hard-coding the "\\" separator.
                File newfile = new File(file, flist[i]);
                if (!newfile.isDirectory()) {
                    fileList.add(newfile.getAbsolutePath());
                } else {
                    // If the entry is a directory, recurse into it.
                    readDirs(newfile.getPath());
                }
            }
        }
        return fileList;
    }

    // Read a whole GBK-encoded file into one string.
    public static String readFile(String file) throws FileNotFoundException, IOException {
        StringBuilder strSb = new StringBuilder();
        // try-with-resources closes the reader once the file has been read.
        try (BufferedReader br = new BufferedReader(
                new InputStreamReader(new FileInputStream(file), "gbk"))) {
            String line = br.readLine();
            while (line != null) {
                strSb.append(line).append("\r\n");
                line = br.readLine();
            }
        }
        return strSb.toString();
    }

    // Word segmentation.
    public static ArrayList<String> cutWords(String file) throws IOException {
        String text = ReadFiles.readFile(file);
        IKAnalyzer analyzer = new IKAnalyzer();
        // split(...) is kept from the original post; whether it is available
        // depends on the IKAnalyzer build on the classpath.
        ArrayList<String> words = analyzer.split(text);
        return words;
    }

    // Term frequency in a file: raw occurrence count of each word.
    public static HashMap<String, Integer> normalTF(ArrayList<String> cutwords) {
        HashMap<String, Integer> resTF = new HashMap<String, Integer>();
        for (String word : cutwords) {
            Integer count = resTF.get(word);
            resTF.put(word, count == null ? 1 : count + 1);
            System.out.println(word);
        }
        return resTF;
    }

    // Term frequency in a file: count of each word / total number of words.
    public static HashMap<String, Float> tf(ArrayList<String> cutwords) {
        HashMap<String, Float> resTF = new HashMap<String, Float>();
        int wordLen = cutwords.size();
        HashMap<String, Integer> intTF = ReadFiles.normalTF(cutwords);
        for (Map.Entry<String, Integer> entry : intTF.entrySet()) {
            float value = entry.getValue().floatValue() / wordLen;
            resTF.put(entry.getKey(), value);
            System.out.println(entry.getKey() + " = " + value);
        }
        return resTF;
    }

    // Raw counts for every file under a directory.
    public static HashMap<String, HashMap<String, Integer>> normalTFAllFiles(String dirc) throws IOException {
        HashMap<String, HashMap<String, Integer>> allNormalTF = new HashMap<String, HashMap<String, Integer>>();
        List<String> filelist = ReadFiles.readDirs(dirc);
        for (String file : filelist) {
            ArrayList<String> cutwords = ReadFiles.cutWords(file); // segmented words of one file
            allNormalTF.put(file, ReadFiles.normalTF(cutwords));
        }
        return allNormalTF;
    }

    // tf for every file under a directory.
    public static HashMap<String, HashMap<String, Float>> tfAllFiles(String dirc) throws IOException {
        HashMap<String, HashMap<String, Float>> allTF = new HashMap<String, HashMap<String, Float>>();
        List<String> filelist = ReadFiles.readDirs(dirc);
        for (String file : filelist) {
            ArrayList<String> cutwords = ReadFiles.cutWords(file); // segmented words of one file
            allTF.put(file, ReadFiles.tf(cutwords));
        }
        return allTF;
    }

    // idf of each word: log(number of files / number of files containing the word).
    public static HashMap<String, Float> idf(HashMap<String, HashMap<String, Float>> all_tf) {
        HashMap<String, Float> resIdf = new HashMap<String, Float>();
        HashMap<String, Integer> dict = new HashMap<String, Integer>(); // word -> document frequency
        int docNum = fileList.size();
        for (int i = 0; i < docNum; i++) {
            HashMap<String, Float> temp = all_tf.get(fileList.get(i));
            for (String word : temp.keySet()) {
                Integer df = dict.get(word);
                dict.put(word, df == null ? 1 : df + 1);
            }
        }
        System.out.println("idf for every word is:");
        for (Map.Entry<String, Integer> entry : dict.entrySet()) {
            float value = (float) Math.log(docNum / entry.getValue().floatValue());
            resIdf.put(entry.getKey(), value);
            System.out.println(entry.getKey() + " = " + value);
        }
        return resIdf;
    }

    // tf-idf of every word in every file: tf * idf.
    public static void tf_idf(HashMap<String, HashMap<String, Float>> all_tf, HashMap<String, Float> idfs) {
        HashMap<String, HashMap<String, Float>> resTfIdf = new HashMap<String, HashMap<String, Float>>();
        int docNum = fileList.size();
        for (int i = 0; i < docNum; i++) {
            String filepath = fileList.get(i);
            HashMap<String, Float> tfidf = new HashMap<String, Float>();
            HashMap<String, Float> temp = all_tf.get(filepath);
            for (Map.Entry<String, Float> entry : temp.entrySet()) {
                String word = entry.getKey();
                tfidf.put(word, entry.getValue() * idfs.get(word));
            }
            resTfIdf.put(filepath, tfidf);
        }
        System.out.println("tf-idf for every file is :");
        disTFIDF(resTfIdf);
    }

    // Print the tf-idf map of every file.
    public static void disTFIDF(HashMap<String, HashMap<String, Float>> tfidf) {
        for (Map.Entry<String, HashMap<String, Float>> entrys : tfidf.entrySet()) {
            System.out.println("filename: " + entrys.getKey());
            System.out.print("{");
            for (Map.Entry<String, Float> entry : entrys.getValue().entrySet()) {
                System.out.print(entry.getKey() + " = " + entry.getValue() + ", ");
            }
            System.out.println("}");
        }
    }

    public static void main(String[] args) throws IOException {
        String file = "d:/testfiles";

        HashMap<String, HashMap<String, Float>> all_tf = tfAllFiles(file);
        System.out.println();
        HashMap<String, Float> idfs = idf(all_tf);
        System.out.println();
        tf_idf(all_tf, idfs);
    }
}
```
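For readers who want to check the tf and idf arithmetic without IKAnalyzer or a directory of test files, here is a dependency-free sketch of the same pipeline. It is not the author's code: it assumes space-separated text, substitutes a whitespace tokenizer for IKAnalyzer, and uses hypothetical names (`TfIdfSketch`, `tokenize`, `tfIdf`).

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;

public class TfIdfSketch {

    // Whitespace tokenizer standing in for IKAnalyzer (assumption: space-separated text).
    public static List<String> tokenize(String text) {
        List<String> tokens = new ArrayList<String>();
        for (String t : text.trim().split("\\s+")) {
            if (!t.isEmpty()) {
                tokens.add(t);
            }
        }
        return tokens;
    }

    // tf-idf per document: tf = count / document length, idf = log(N / df).
    public static List<Map<String, Double>> tfIdf(List<String> texts) {
        List<List<String>> docs = new ArrayList<List<String>>();
        Map<String, Integer> docFreq = new HashMap<String, Integer>();
        for (String text : texts) {
            List<String> tokens = tokenize(text);
            docs.add(tokens);
            // Count each term once per document.
            for (String term : new HashSet<String>(tokens)) {
                docFreq.merge(term, 1, Integer::sum);
            }
        }
        List<Map<String, Double>> result = new ArrayList<Map<String, Double>>();
        for (List<String> tokens : docs) {
            Map<String, Double> scores = new HashMap<String, Double>();
            for (String term : tokens) {
                scores.merge(term, 1.0 / tokens.size(), Double::sum); // accumulates tf
            }
            for (Map.Entry<String, Double> e : scores.entrySet()) {
                double idf = Math.log((double) texts.size() / docFreq.get(e.getKey()));
                e.setValue(e.getValue() * idf); // tf * idf
            }
            result.add(scores);
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> texts = Arrays.asList(
                "the cat sat on the mat",
                "the dog sat on the log",
                "cats and dogs");
        List<Map<String, Double>> scores = tfIdf(texts);
        for (int i = 0; i < texts.size(); i++) {
            System.out.println("doc " + i + ": " + scores.get(i));
        }
    }
}
```

In the first toy document, "cat" (found in one document) ends up with a higher score than "the" (found in two), even though "the" occurs twice as often there.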

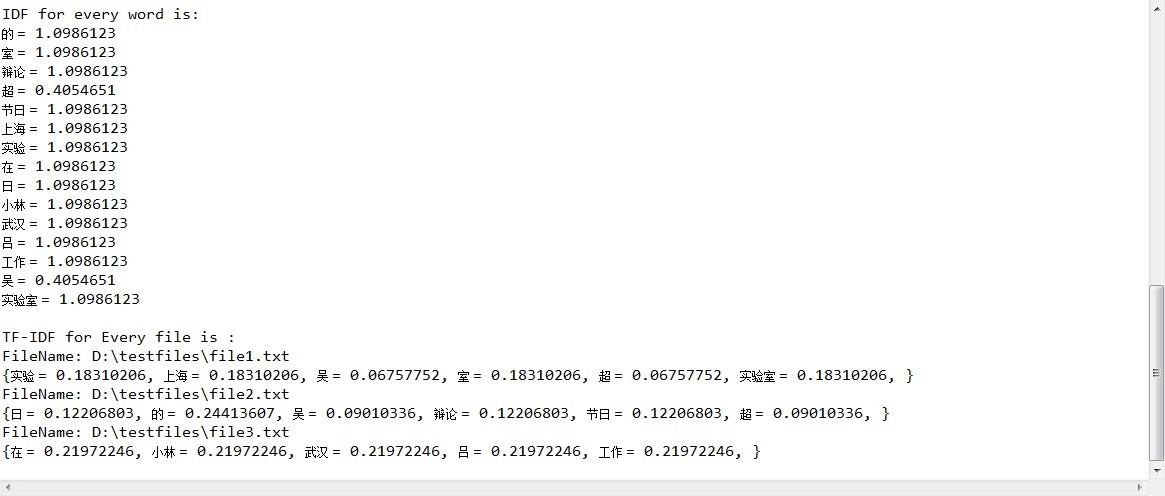

The results are shown in the figures below:

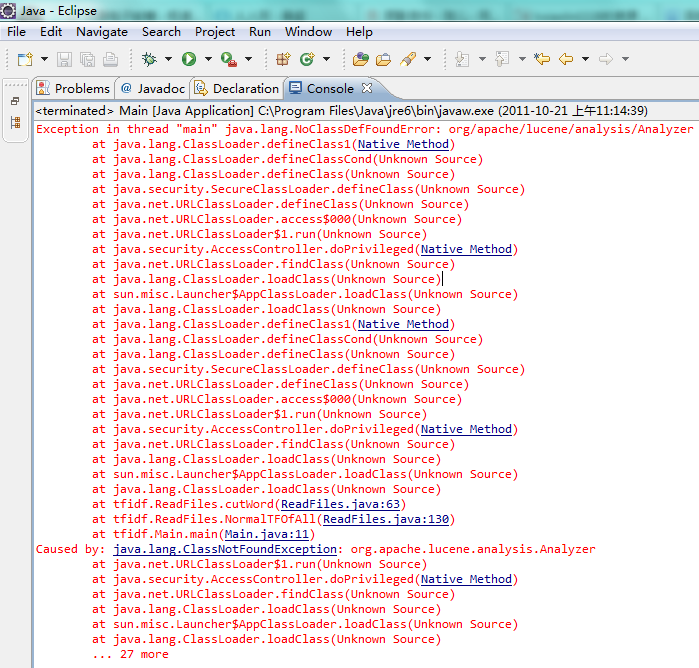

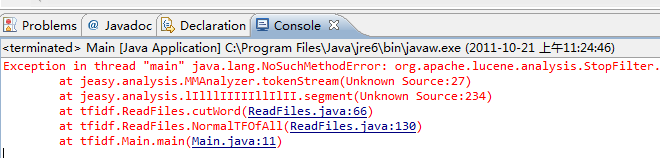

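Common problems

The Lucene jar has not been added to the project.

The versions of the Lucene package and the je package are incompatible.

Summary

That is everything in this article on understanding tf-idf and its Java implementation example. I hope it is helpful. If anything is lacking, feel free to leave a comment.

Original link: https://www.cnblogs.com/ywl925/archive/2013/08/26/3275878.html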