利用python批量爬取百度任意类别的图片时:

(1):设置类别名字。

(2):设置类别的数目,即每一类别的的图片数量。

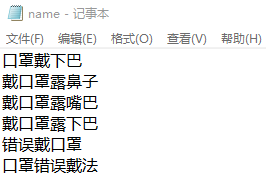

(3):编辑一个txt文件,命名为name.txt,在txt文件中输入类别,此类别即为关键字。并将txt文件与python源代码放在同一个目录下。

python源代码:

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

|

# -*- coding: utf-8 -*-"""Created on Sun Sep 13 21:35:34 2020@author: ydc"""import reimport requestsfrom urllib import errorfrom bs4 import BeautifulSoupimport osnum = 0numPicture = 0file = ''List = []def Find(url, A): global List print('正在检测图片总数,请稍等.....') t = 0 i = 1 s = 0 while t < 1000: Url = url + str(t) try: # 这里搞了下 Result = A.get(Url, timeout=7, allow_redirects=False) except BaseException: t = t + 60 continue else: result = Result.text pic_url = re.findall('"objURL":"(.*?)",', result, re.S) # 先利用正则表达式找到图片url s += len(pic_url) if len(pic_url) == 0: break else: List.append(pic_url) t = t + 60 return sdef recommend(url): Re = [] try: html = requests.get(url, allow_redirects=False) except error.HTTPError as e: return else: html.encoding = 'utf-8' bsObj = BeautifulSoup(html.text, 'html.parser') div = bsObj.find('div', id='topRS') if div is not None: listA = div.findAll('a') for i in listA: if i is not None: Re.append(i.get_text()) return Redef dowmloadPicture(html, keyword): global num # t =0 pic_url = re.findall('"objURL":"(.*?)",', html, re.S) # 先利用正则表达式找到图片url print('找到关键词:' + keyword + '的图片,即将开始下载图片...') for each in pic_url: print('正在下载第' + str(num + 1) + '张图片,图片地址:' + str(each)) try: if each is not None: pic = requests.get(each, timeout=7) else: continue except BaseException: print('错误,当前图片无法下载') continue else: string = file + r'\\' + keyword + '_' + str(num) + '.jpg' fp = open(string, 'wb') fp.write(pic.content) fp.close() num += 1 if num >= numPicture: returnif __name__ == '__main__': # 主函数入口 headers = { 'Accept-Language': 'zh-CN,zh;q=0.8,zh-TW;q=0.7,zh-HK;q=0.5,en-US;q=0.3,en;q=0.2', 'Connection': 'keep-alive', 'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64; rv:60.0) Gecko/20100101 Firefox/60.0', 'Upgrade-Insecure-Requests': '1' } A = requests.Session() A.headers = headers ############################### tm = int(input('请输入每类图片的下载数量 ')) numPicture = tm line_list = [] with open('./name.txt', encoding='utf-8') as file: line_list = [k.strip() for k in file.readlines()] # 用 strip()移除末尾的空格 for word in line_list: url = 'https://image.baidu.com/search/flip?tn=baiduimage&ie=utf-8&word=' + word + '&pn=' tot = Find(url, A) Recommend = recommend(url) # 记录相关推荐 print('经过检测%s类图片共有%d张' % (word, tot)) file = word + '文件' y = os.path.exists(file) if y == 1: print('该文件已存在,请重新输入') file = word + '文件夹2' os.mkdir(file) else: os.mkdir(file) t = 0 tmp = url while t < numPicture: try: url = tmp + str(t) # result = requests.get(url, timeout=10) # 这里搞了下 result = A.get(url, timeout=10, allow_redirects=False) print(url) except error.HTTPError as e: print('网络错误,请调整网络后重试') t = t + 60 else: dowmloadPicture(result.text, word) t = t + 60 # numPicture = numPicture + tm print('当前搜索结束,感谢使用') |

到此这篇关于利用python批量爬取百度任意类别的图片的实现方法的文章就介绍到这了,更多相关python批量爬取百度图片内容请搜索服务器之家以前的文章或继续浏览下面的相关文章希望大家以后多多支持服务器之家!

原文链接:https://blog.csdn.net/qq_42612717/article/details/108922563