1、Python中获取整个页面的代码:

|

1

2

3

4

|

import requestsres = requests.get('https://blog.csdn.net/yirexiao/article/details/79092355')res.encoding = 'utf-8'print(res.text) |

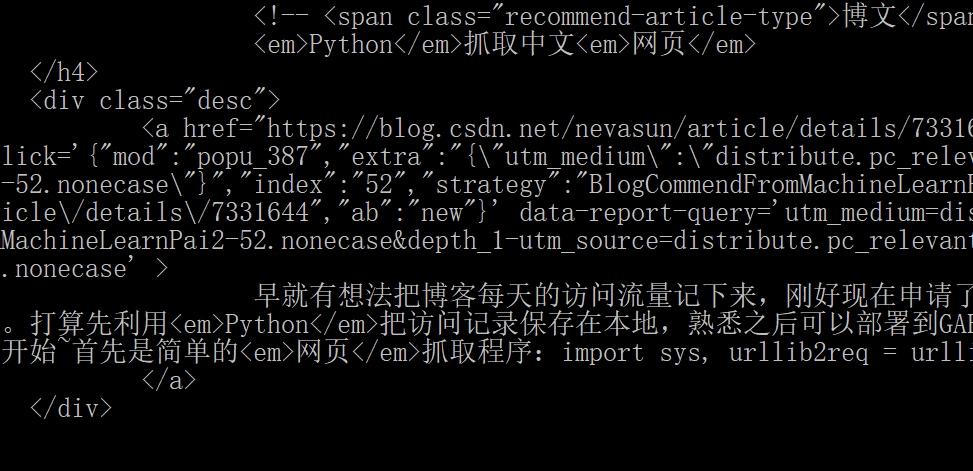

2、运行结果

实例扩展:

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

|

from bs4 import BeautifulSoupimport time,re,urllib2t=time.time()websiteurls={}def scanpage(url): websiteurl=url t=time.time() n=0 html=urllib2.urlopen(websiteurl).read() soup=BeautifulSoup(html) pageurls=[] Upageurls={} pageurls=soup.find_all("a",href=True) for links in pageurls: if websiteurl in links.get("href") and links.get("href") not in Upageurls and links.get("href") not in websiteurls: Upageurls[links.get("href")]=0 for links in Upageurls.keys(): try: urllib2.urlopen(links).getcode() except: print "connect failed" else: t2=time.time() Upageurls[links]=urllib2.urlopen(links).getcode() print n, print links, print Upageurls[links] t1=time.time() print t1-t2 n+=1 print ("total is "+repr(n)+" links") print time.time()-tscanpage(<a href="http://news.163.com/" rel="external nofollow">http://news.163.com/</a>) |

到此这篇关于python获取整个网页源码的方法的文章就介绍到这了,更多相关python如何获取整个页面内容请搜索服务器之家以前的文章或继续浏览下面的相关文章希望大家以后多多支持服务器之家!

原文链接:https://www.py.cn/faq/python/18406.html