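I have always been curious about networking. I had long wanted to write a web crawler, but I kept putting it off. It felt like a hassle, because every small mistake could eat up a lot of debugging time.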

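Then I decided that, since I had made myself this promise long ago, I should just get it done. I would start simple, add features gradually, implement one whenever I have time, and keep refining the code along the way.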

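Below is my simple implementation that fetches a specified web page and saves it to disk. There are several ways to do this, and I will add them here one at a time.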

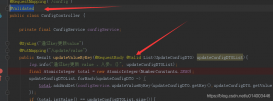

Crawling with URLConnection

```java
package html;

import java.io.BufferedReader;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.Writer;
import java.net.MalformedURLException;
import java.net.URL;
import java.net.URLConnection;

public class Spider {
    public static void main(String[] args) {
        String filepath = "d:/124.html";
        String url_str = "http://www.hao123.com/";
        String charset = "utf-8"; // assumed page encoding; see the note on garbled Chinese below
        int sec_cont = 1000;      // one second in milliseconds

        URL url;
        try {
            url = new URL(url_str);
        } catch (MalformedURLException e) {
            e.printStackTrace();
            return; // without a valid URL there is nothing to fetch (avoids a NullPointerException)
        }

        try {
            URLConnection url_con = url.openConnection();
            // setDoOutput(true) is not needed: we only read the response, we never send a body
            url_con.setConnectTimeout(10 * sec_cont);
            url_con.setReadTimeout(10 * sec_cont);
            // Some sites reject requests that lack a browser-like User-Agent
            url_con.setRequestProperty("User-Agent", "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1)");
            // try-with-resources closes the response stream when we are done
            try (InputStream htm_in = url_con.getInputStream()) {
                String htm_str = InputStream2String(htm_in, charset);
                saveHtml(filepath, htm_str);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Method: saveHtml
     * Description: save String to file as UTF-8, overwriting any existing file
     * @param filepath file path which need to be saved
     * @param str string saved
     */
    public static void saveHtml(String filepath, String str) {
        // new FileOutputStream(filepath) overwrites; passing "true" would append a second copy on every run
        try (Writer outs = new OutputStreamWriter(new FileOutputStream(filepath), "utf-8")) {
            outs.write(str);
            System.out.print(str);
        } catch (IOException e) {
            System.out.println("Error at save html...");
            e.printStackTrace();
        }
    }

    /**
     * Method: InputStream2String
     * Description: make InputStream to String
     * @param in_st inputstream which need to be converted
     * @param charset encoding used to decode the bytes
     * @throws IOException if an error occurred
     */
    public static String InputStream2String(InputStream in_st, String charset) throws IOException {
        BufferedReader buff = new BufferedReader(new InputStreamReader(in_st, charset));
        StringBuilder res = new StringBuilder();
        String line;
        while ((line = buff.readLine()) != null) {
            res.append(line).append('\n'); // readLine() strips line breaks, so put them back
        }
        return res.toString();
    }
}
```

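While implementing this, garbled Chinese text in the crawled page turned out to be the most troublesome problem. It appears whenever the charset used to decode the response (hard-coded as utf-8 above) does not match the page's real encoding, for example when a GBK page is decoded as UTF-8.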

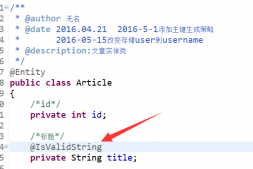
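One way to reduce the garbling is to stop hard-coding utf-8 and instead take the charset from the Content-Type response header, falling back to a charset declared in a `<meta>` tag near the top of the page. The sketch below is my own illustration, not part of the original code: the class `CharsetDetector` and its methods `findCharset` and `detect` are hypothetical names, and the regular expression only covers the common `charset=xxx` forms.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper (not from the original post): choose a charset for decoding a crawled page.
public class CharsetDetector {
    // Matches "charset=GBK", "charset = \"utf-8\"", etc., case-insensitively
    private static final Pattern CHARSET =
            Pattern.compile("charset\\s*=\\s*[\"']?([\\w.:-]+)", Pattern.CASE_INSENSITIVE);

    /** Returns the canonical charset name found in text, or null if absent or unsupported. */
    static String findCharset(String text) {
        if (text == null) {
            return null;
        }
        Matcher m = CHARSET.matcher(text);
        if (!m.find()) {
            return null;
        }
        try {
            return Charset.forName(m.group(1)).name();
        } catch (IllegalArgumentException e) {
            // IllegalCharsetNameException and UnsupportedCharsetException both extend IllegalArgumentException
            return null;
        }
    }

    /** Header charset first, then a charset declared in the first 2 KB of the body, else the fallback. */
    static String detect(String contentType, byte[] body, String fallback) {
        String cs = findCharset(contentType);
        if (cs != null) {
            return cs;
        }
        // The <meta> declaration is plain ASCII, so decoding the head as ISO-8859-1 is enough to find it
        int len = Math.min(body.length, 2048);
        cs = findCharset(new String(body, 0, len, StandardCharsets.ISO_8859_1));
        return cs != null ? cs : fallback;
    }
}
```

With URLConnection you could read the whole body into a byte array first and then decode it with `new String(bytes, CharsetDetector.detect(url_con.getContentType(), bytes, "UTF-8"))`, instead of handing a fixed charset to InputStreamReader. Reading bytes before decoding is what makes the `<meta>` fallback possible.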

Crawling with HttpClient

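Implementing the crawler with HttpClient, I ran into quite a few problems. First, there are two HttpClients: one built into Sun's JDK and an open-source Apache project. The Sun one does not seem to be widely used, so I did not implement it and went with the Apache project instead (from here on, HttpClient always means the Apache version). Second, the latest releases differ from the older ones: starting with HttpClient 4.x the packages you import changed (3.x lives in org.apache.commons.httpclient, 4.x in org.apache.http), and much of what you find online is written for HttpClient 3.x. So if you use the latest version, you are better off following its official documentation.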
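For reference, newer JDKs (Java 11 and later) ship a public, supported client, java.net.http.HttpClient. It is not the internal Sun class mentioned above, but it gives a third way to fetch a page without any extra jars. This is a minimal sketch assuming Java 11+; the class `SpiderJdkHttpClient` and its methods `buildRequest` and `fetchToFile` are hypothetical names, and the User-Agent and 10-second timeout are copied from the URLConnection version.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.file.Path;
import java.time.Duration;

// Hypothetical example (not from the original post); requires Java 11+.
public class SpiderJdkHttpClient {
    // GET request with the same User-Agent and a 10-second timeout, as in the URLConnection version
    static HttpRequest buildRequest(String url) {
        return HttpRequest.newBuilder(URI.create(url))
                .timeout(Duration.ofSeconds(10))
                .header("User-Agent", "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1)")
                .GET()
                .build();
    }

    // Writes the response body byte-for-byte to filepath and returns the HTTP status code
    static int fetchToFile(String url, String filepath) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(10))
                .followRedirects(HttpClient.Redirect.NORMAL) // follow redirects, except https -> http
                .build();
        HttpResponse<Path> response =
                client.send(buildRequest(url), HttpResponse.BodyHandlers.ofFile(Path.of(filepath)));
        return response.statusCode();
    }
}
```

Calling `SpiderJdkHttpClient.fetchToFile("http://www.hao123.com/", "d:/126.html")` saves the raw bytes without decoding them, so the file keeps whatever encoding the server sent; the garbled-Chinese question only comes back once you turn those bytes into a String.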

I use Eclipse, so the required jars have to be added to the project's build path.

First, download HttpClient from http://hc.apache.org/downloads.cgi. I used HttpClient 4.2.

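Then unzip it and locate commons-codec-1.6.jar, commons-logging-1.1.1.jar, httpclient-4.2.5.jar and httpcore-4.2.4.jar in the /lib folder. The version numbers depend on the release you download. There are other jars as well, but I don't need them yet, so I only imported the required ones.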

Finally, add these jars to the classpath: right-click the project => Build Path => Configure Build Path => Add External JARs..., then select the jars listed above.

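Another option is to copy the jars directly into a lib folder inside the project directory. In a plain Java project you then still need to select them and choose Build Path => Add to Build Path.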
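Whichever way you add the jars, a quick check that Eclipse actually picked them up is to try loading one representative class from each jar. A minimal sketch: `ClasspathCheck` and `isOnClasspath` are hypothetical names, and the four class names are ones that ship in the four jars listed above.

```java
// Hypothetical helper (not from the original post): report which required jars are on the classpath.
public class ClasspathCheck {
    // True if the class can be found; initialize=false avoids running its static initializers
    static boolean isOnClasspath(String className) {
        try {
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String[] required = {
            "org.apache.http.impl.client.DefaultHttpClient", // httpclient-4.2.x.jar
            "org.apache.http.HttpEntity",                     // httpcore-4.2.x.jar
            "org.apache.commons.logging.LogFactory",          // commons-logging-1.1.1.jar
            "org.apache.commons.codec.binary.Base64"          // commons-codec-1.6.jar
        };
        for (String name : required) {
            System.out.println((isOnClasspath(name) ? "OK      " : "MISSING ") + name);
        }
    }
}
```

Running it from inside the project should print OK for all four lines; a MISSING line points at the jar that did not make it onto the build path.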

Here is the implementation:

```java
package html;

import java.io.BufferedReader;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.Writer;

import org.apache.http.HttpEntity;
import org.apache.http.HttpResponse;
import org.apache.http.client.HttpClient;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.DefaultHttpClient;

// Written against HttpClient 4.2 (DefaultHttpClient is deprecated from 4.3 on)
public class SpiderHttpClient {
    public static void main(String[] args) throws Exception {
        String url_str = "http://www.hao123.com";
        String charset = "utf-8"; // assumed page encoding
        String filepath = "d:/125.html";

        HttpClient hc = new DefaultHttpClient();
        try {
            HttpGet hg = new HttpGet(url_str);
            HttpResponse response = hc.execute(hg);
            HttpEntity entity = response.getEntity();
            if (entity != null) {
                // Prints -1 when the server sends no Content-Length (e.g. chunked transfer)
                System.out.println(entity.getContentLength());
                // Closing the content stream also releases the connection
                try (InputStream htm_in = entity.getContent()) {
                    String htm_str = InputStream2String(htm_in, charset);
                    saveHtml(filepath, htm_str);
                }
            }
        } finally {
            // Shut down the connection manager (the 4.2-era way to free connections)
            hc.getConnectionManager().shutdown();
        }
    }

    /**
     * Method: saveHtml
     * Description: save String to file as UTF-8, overwriting any existing file
     * @param filepath file path which need to be saved
     * @param str string saved
     */
    public static void saveHtml(String filepath, String str) {
        try (Writer outs = new OutputStreamWriter(new FileOutputStream(filepath), "utf-8")) {
            outs.write(str);
        } catch (IOException e) {
            System.out.println("Error at save html...");
            e.printStackTrace();
        }
    }

    /**
     * Method: InputStream2String
     * Description: make InputStream to String
     * @param in_st inputstream which need to be converted
     * @param charset encoding used to decode the bytes
     * @throws IOException if an error occurred
     */
    public static String InputStream2String(InputStream in_st, String charset) throws IOException {
        BufferedReader buff = new BufferedReader(new InputStreamReader(in_st, charset));
        StringBuilder res = new StringBuilder();
        String line;
        while ((line = buff.readLine()) != null) {
            res.append(line).append('\n'); // readLine() strips line breaks, so put them back
        }
        return res.toString();
    }
}
```


Original article: http://www.cnblogs.com/ywl925/archive/2013/08/20/3270875.html