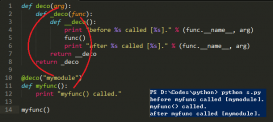

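This article walks through an example of scraping Baidu Baike with Python, shared here for reference. The script targets Python 2 (it uses `urllib2` and the `print` statement). The details are as follows: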

    #!/usr/bin/python
    # -*- coding: utf-8 -*-
    # Filename: get_baike.py
    import re
    import urllib2

    def getHtml(url, time=10):
        # Download the raw page bytes, giving up after `time` seconds
        response = urllib2.urlopen(url, timeout=time)
        html = response.read()
        response.close()
        return html

    def clearBlank(html):
        # Remove line breaks and tabs, then collapse repeated blanks
        if len(html) == 0:
            return ''
        html = re.sub('\r|\n|\t', '', html)
        while html.find('&nbsp;') != -1 or html.find('  ') != -1:
            html = html.replace('&nbsp;', ' ').replace('  ', ' ')
        return html

    if __name__ == '__main__':
        html = getHtml('http://baike.baidu.com/view/4617031.htm', 10)
        # Transcode the page from GB2312 to UTF-8
        html = html.decode('gb2312', 'replace').encode('utf-8')
        title_reg = r'<h1 class="title" id="[\d]+">(.*?)</h1>'
        content_reg = r'<div class="card-summary-content">(.*?)</p>'
        title = re.compile(title_reg).findall(html)
        content = re.compile(content_reg).findall(html)
        # Strip any remaining HTML tags from the first match of each pattern
        title[0] = re.sub(r'<[^>]*?>', '', title[0])
        content[0] = re.sub(r'<[^>]*?>', '', content[0])
        print title[0]
        print '#######################'
        print content[0]
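For readers on Python 3, the same idea can be sketched roughly as follows. This is only an illustration under a few assumptions, not the original author's code: the names `fetch_html`, `strip_tags`, `clean_blank`, and `extract` are hypothetical; `urllib.request` stands in for `urllib2`; the patterns are compiled with `re.S` so that a summary spanning several lines can still match; and runs of whitespace are collapsed to a single space rather than deleted. The sample HTML is made up to mirror the markup the script above expects, so the extraction step can be tried without network access.

```python
import re
import urllib.request

# Same patterns as the script above; re.S (an addition) lets "." match newlines.
TITLE_RE = re.compile(r'<h1 class="title" id="\d+">(.*?)</h1>', re.S)
SUMMARY_RE = re.compile(r'<div class="card-summary-content">(.*?)</p>', re.S)
TAG_RE = re.compile(r'<[^>]*?>')


def fetch_html(url, timeout=10, encoding='gb2312'):
    """Download a page and decode it; undecodable bytes are replaced."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode(encoding, 'replace')


def strip_tags(fragment):
    """Remove anything that looks like an HTML tag."""
    return TAG_RE.sub('', fragment)


def clean_blank(text):
    """Turn &nbsp; into spaces and collapse whitespace runs to one space."""
    text = text.replace('&nbsp;', ' ')
    return re.sub(r'\s+', ' ', text).strip()


def extract(html):
    """Return (title, summary); each is None when its pattern does not match."""
    title = TITLE_RE.search(html)
    summary = SUMMARY_RE.search(html)
    return (
        clean_blank(strip_tags(title.group(1))) if title else None,
        clean_blank(strip_tags(summary.group(1))) if summary else None,
    )


if __name__ == '__main__':
    # Made-up sample markup, shaped like the page structure assumed above.
    sample = (
        '<h1 class="title" id="4617031"><span>Example</span> entry</h1>\n'
        '<div class="card-summary-content"><p>A <b>short</b>\n summary.</p></div>'
    )
    print(extract(sample))
```

Returning `None` for a missing match avoids the `IndexError` that indexing `title[0]` would raise when the page layout differs from what the patterns expect. To run against a live page, pass the result of `fetch_html(url)` to `extract`.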

I hope this article is helpful for your Python programming.