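I previously wrote an article on setting up MongoDB across multiple servers in master-slave mode; see "A Detailed Guide to MongoDB Master-Slave Configuration". Master-slave mode cannot fail over or recover automatically, so for high availability across several servers I recommend a MongoDB replica set instead. Replica set members exchange heartbeats with one another, which is how they detect a failed primary and elect a new one without manual intervention. That built-in behavior is what makes replica sets so capable.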

I. Three servers: one primary, two secondaries

Server 1: 127.0.0.1:27017

Server 2: 127.0.0.1:27018

Server 3: 127.0.0.1:27019

1. Create the database directories

```
[root@localhost ~]# mkdir /var/lib/{mongodb_2,mongodb_3}
```

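Because all three "servers" are simulated on a single machine, each instance needs its own database directory. The first instance uses the existing /var/lib/mongodb; the command above creates the directories for the other two.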

2. Create the configuration files

```
[root@localhost ~]# cat /etc/mongodb.conf | awk '{if($0 !~ /^$/ && $0 !~ /^#/) {print $0}}'    # primary server
port = 27017                                  # listening port
fork = true                                   # run in the background
pidfilepath = /var/run/mongodb/mongodb.pid    # PID file
logpath = /var/log/mongodb/mongodb.log        # log file
dbpath = /var/lib/mongodb                     # data directory
journal = true                                # enable journaling (write-ahead log for crash recovery)
nohttpinterface = true                        # disable the HTTP status interface
directoryperdb = true                         # one directory per database
logappend = true                              # append to the log instead of overwriting it
replSet = repmore                             # replica set name, your choice
oplogSize = 1000                              # oplog size, in MB
[root@localhost ~]# cat /etc/mongodb_2.conf | awk '{if($0 !~ /^$/ && $0 !~ /^#/) {print $0}}'    # secondary
port = 27018
fork = true
pidfilepath = /var/run/mongodb/mongodb_2.pid
logpath = /var/log/mongodb/mongodb_2.log
dbpath = /var/lib/mongodb_2
journal = true
nohttpinterface = true
directoryperdb = true
logappend = true
replSet = repmore
oplogSize = 1000
[root@localhost ~]# cat /etc/mongodb_3.conf | awk '{if($0 !~ /^$/ && $0 !~ /^#/) {print $0}}'    # secondary
port = 27019
fork = true
pidfilepath = /var/run/mongodb/mongodb_3.pid
logpath = /var/log/mongodb/mongodb_3.log
dbpath = /var/lib/mongodb_3
journal = true
nohttpinterface = true
oplogSize = 1000
directoryperdb = true
logappend = true
replSet = repmore
```
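The three files above differ only in the port and in the `_2`/`_3` suffix on the PID, log, and data paths, so they could also be generated from a single template. The following is a minimal sketch in plain JavaScript for Node.js; `renderMongodConf` and its parameters are hypothetical names, and the settings simply mirror the ones shown above.

```javascript
// Hypothetical helper: render one mongod config file (old INI-style format)
// for instance number n (1, 2, 3, ...). Instance 1 uses the bare names,
// later instances append "_<n>", matching the files shown above.
function renderMongodConf(n, basePort, replSetName) {
  var suffix = n === 1 ? "" : "_" + n;
  var settings = [
    ["port", basePort + n - 1],
    ["fork", "true"],
    ["pidfilepath", "/var/run/mongodb/mongodb" + suffix + ".pid"],
    ["logpath", "/var/log/mongodb/mongodb" + suffix + ".log"],
    ["dbpath", "/var/lib/mongodb" + suffix],
    ["journal", "true"],
    ["nohttpinterface", "true"],
    ["directoryperdb", "true"],
    ["logappend", "true"],
    ["replSet", replSetName],
    ["oplogSize", 1000] // megabytes
  ];
  return settings.map(function (kv) {
    return kv[0] + " = " + kv[1];
  }).join("\n") + "\n";
}

// Print all three files; saving them as /etc/mongodb*.conf is up to you.
for (var n = 1; n <= 3; n++) {
  console.log("# /etc/mongodb" + (n === 1 ? "" : "_" + n) + ".conf");
  console.log(renderMongodConf(n, 27017, "repmore"));
}
```

Keeping one template means a setting such as `replSet` cannot accidentally differ between members.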

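One thing to watch out for: do not enable authentication (`auth = true`) in these files. Otherwise, when you check `rs.status();`, the members cannot connect to each other and you will see `"lastHeartbeatMessage" : "initial sync couldn't connect to 127.0.0.1:27017"`. With authentication on, replica set members also have to authenticate to one another, which requires every member to be started with the same shared `keyFile`.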
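If you do turn authentication on later, a quick sanity check is that any replica set config with `auth = true` also names a `keyFile`. The sketch below parses the same `key = value` format (skipping blank lines and `#` comment lines, similar to the awk filter above, and also stripping trailing `#` comments) and flags that case. `parseMongodConf`, `checkReplicaAuth`, and the key file path in the usage are hypothetical; the only MongoDB behavior assumed is that members authenticate to each other through a shared key file.

```javascript
// Hypothetical helper: parse an INI-style mongod config into a plain object.
function parseMongodConf(text) {
  var conf = {};
  text.split("\n").forEach(function (line) {
    var trimmed = line.trim();
    if (trimmed === "" || trimmed.charAt(0) === "#") return; // blank or comment line
    var hash = trimmed.indexOf("#");                          // strip trailing comment
    if (hash !== -1) trimmed = trimmed.slice(0, hash).trim();
    var eq = trimmed.indexOf("=");
    if (eq === -1) return;                                    // not a key = value line
    conf[trimmed.slice(0, eq).trim()] = trimmed.slice(eq + 1).trim();
  });
  return conf;
}

// Hypothetical check: returns a warning string, or null if nothing looks wrong.
function checkReplicaAuth(conf) {
  if (conf.replSet && conf.auth === "true" && !conf.keyFile) {
    return "auth is enabled but no keyFile is set; members cannot authenticate to each other";
  }
  return null;
}
```

For example, `checkReplicaAuth(parseMongodConf("replSet = repmore\nauth = true\n"))` returns the warning, while adding a line such as `keyFile = /etc/mongodb.key` (a hypothetical path) makes it return `null`.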

3. Start the three servers

```
mongod -f /etc/mongodb.conf
mongod -f /etc/mongodb_2.conf
mongod -f /etc/mongodb_3.conf
```

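Note: on the first start, the instance on port 27017 (the intended primary) comes up fairly quickly, while the two intended secondaries take a little longer.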

II. Configure and initialize the replica set

1. Define the replica set members

```
> config = {_id: "repmore", members: [
    {_id: 0, host: '127.0.0.1:27017', priority: 2},
    {_id: 1, host: '127.0.0.1:27018', priority: 1},
    {_id: 2, host: '127.0.0.1:27019', priority: 1}
]}
```
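The member list above follows a simple pattern, so it can also be built programmatically. This sketch is plain ES5 JavaScript, so it should paste into the mongo shell as well as run under Node.js; `buildReplSetConfig` is a hypothetical helper that numbers members in order and gives one preferred host priority 2, as the config above does for 127.0.0.1:27017.

```javascript
// Hypothetical helper: build a replica set config document like the one above.
// hosts: array of "host:port" strings; preferred: the host that gets priority 2,
// every other member gets priority 1.
function buildReplSetConfig(setName, hosts, preferred) {
  return {
    _id: setName,
    members: hosts.map(function (host, i) {
      return { _id: i, host: host, priority: host === preferred ? 2 : 1 };
    })
  };
}
```

In the mongo shell this would be used as `config = buildReplSetConfig("repmore", ["127.0.0.1:27017", "127.0.0.1:27018", "127.0.0.1:27019"], "127.0.0.1:27017")` before calling `rs.initiate(config)`.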

2. Initialize the replica set

```
> rs.initiate(config);
{
    "info" : "Config now saved locally. Should come online in about a minute.",
    "ok" : 1
}
```

3. Check the status of each replica set member

```
repmore:PRIMARY> rs.status();
{
    "set" : "repmore",
    "date" : ISODate("2013-12-16T21:01:51Z"),
    "myState" : 2,
    "syncingTo" : "127.0.0.1:27017",
    "members" : [
        {
            "_id" : 0,
            "name" : "127.0.0.1:27017",
            "health" : 1,
            "state" : 1,
            "stateStr" : "PRIMARY",
            "uptime" : 33,
            "optime" : Timestamp(1387227638, 1),
            "optimeDate" : ISODate("2013-12-16T21:00:38Z"),
            "lastHeartbeat" : ISODate("2013-12-16T21:01:50Z"),
            "lastHeartbeatRecv" : ISODate("2013-12-16T21:01:50Z"),
            "pingMs" : 0,
            "syncingTo" : "127.0.0.1:27018"
        },
        {
            "_id" : 1,
            "name" : "127.0.0.1:27018",
            "health" : 1,
            "state" : 2,
            "stateStr" : "SECONDARY",
            "uptime" : 1808,
            "optime" : Timestamp(1387227638, 1),
            "optimeDate" : ISODate("2013-12-16T21:00:38Z"),
            "errmsg" : "syncing to: 127.0.0.1:27017",
            "self" : true
        },
        {
            "_id" : 2,
            "name" : "127.0.0.1:27019",
            "health" : 1,
            "state" : 2,
            "stateStr" : "SECONDARY",
            "uptime" : 1806,
            "optime" : Timestamp(1387227638, 1),
            "optimeDate" : ISODate("2013-12-16T21:00:38Z"),
            "lastHeartbeat" : ISODate("2013-12-16T21:01:50Z"),
            "lastHeartbeatRecv" : ISODate("2013-12-16T21:01:51Z"),
            "pingMs" : 0,
            "lastHeartbeatMessage" : "syncing to: 127.0.0.1:27018",
            "syncingTo" : "127.0.0.1:27018"
        }
    ],
    "ok" : 1
}
```
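The `rs.status()` document is verbose, and usually only each member's name, state, and health matter. The sketch below condenses it; `summarizeStatus` and `findPrimary` are hypothetical helpers that read only the `members`, `name`, `stateStr`, and `health` fields visible in the output above. It is ES5, so it should also work when pasted into the mongo shell.

```javascript
// Hypothetical helper: one line per member, e.g. "127.0.0.1:27017 PRIMARY (health 1)".
function summarizeStatus(status) {
  return (status.members || []).map(function (m) {
    return m.name + " " + m.stateStr + " (health " + m.health + ")";
  });
}

// Hypothetical helper: name of the current primary, or null if there is none.
function findPrimary(status) {
  var members = status.members || [];
  for (var i = 0; i < members.length; i++) {
    if (members[i].stateStr === "PRIMARY") return members[i].name;
  }
  return null;
}
```

In the mongo shell: `summarizeStatus(rs.status()).forEach(function (line) { print(line); })`.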

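Note that `rs.initiate` also takes some time to complete. When I checked the status right after running it, the secondaries' `stateStr` was not `SECONDARY` but `STARTUP2` (the member is still performing its initial sync); after a short wait it changed to `SECONDARY`. Also note that the output above was captured on the member at port 27018: `myState` is 2 (SECONDARY), and that member is the one marked `"self" : true`.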
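Rather than re-running `rs.status()` by hand until the `STARTUP2` members turn into secondaries, you could poll for it. This is a minimal ES5 sketch; `allMembersReady` is a hypothetical helper, and it assumes a set without arbiters, like the three-member set here, so every healthy member should end up as either PRIMARY or SECONDARY.

```javascript
// Hypothetical helper: true once every member is PRIMARY or SECONDARY.
// STARTUP, STARTUP2, RECOVERING, etc. all count as "not ready yet".
function allMembersReady(status) {
  if (!status || !status.members) return false; // early on rs.status() may return an error document
  return status.members.every(function (m) {
    return m.stateStr === "PRIMARY" || m.stateStr === "SECONDARY";
  });
}
```

In the mongo shell, the built-in `sleep(ms)` makes the wait loop a one-liner: `while (!allMembersReady(rs.status())) { sleep(1000); }`.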

III. Testing the primary and the secondaries

1. Test on the primary

```
repmore:PRIMARY> show dbs;
local   1.078125GB
repmore:PRIMARY> use test
switched to db test
repmore:PRIMARY> db.test.insert({'name':'tank','phone':'12345678'});
repmore:PRIMARY> db.test.find();
{ "_id" : ObjectId("52af64549d2f9e75bc57cda7"), "name" : "tank", "phone" : "12345678" }
```

2. Test on a secondary

```
[root@localhost mongodb]# mongo 127.0.0.1:27018    # connect to a secondary
MongoDB shell version: 2.4.6
connecting to: 127.0.0.1:27018/test
repmore:SECONDARY> show dbs;
local   1.078125GB
test    0.203125GB
repmore:SECONDARY> db.test.find();    // reads are refused by default
error: { "$err" : "not master and slaveOk=false", "code" : 13435 }
repmore:SECONDARY> rs.slaveOk();    // allow reads from this secondary on this connection
repmore:SECONDARY> db.test.find();    // the secondary now shows the document just inserted on the primary
{ "_id" : ObjectId("52af64549d2f9e75bc57cda7"), "name" : "tank", "phone" : "12345678" }
repmore:SECONDARY> db.test.insert({'name':'zhangying','phone':'12345678'});    // secondaries are read-only, so the insert is rejected
not master
```

At this point, the replica set is fully configured.

IV. Failure testing

As mentioned at the start, a MongoDB replica set supports automatic failover. Let's simulate that process.

1. Failover

1.1 Shut down the primary

```
[root@localhost mongodb]# ps aux | grep mongod    # list all mongod processes
root 16977 0.2 1.1 3153692 44464 ? Sl 04:31 0:02 mongod -f /etc/mongodb.conf
root 17032 0.2 1.1 3128996 43640 ? Sl 04:31 0:02 mongod -f /etc/mongodb_2.conf
root 17092 0.2 0.9 3127976 38324 ? Sl 04:31 0:02 mongod -f /etc/mongodb_3.conf
root 20400 0.0 0.0 103248 860 pts/2 S+ 04:47 0:00 grep mongod
[root@localhost mongodb]# kill 16977    # stop the primary's process
[root@localhost mongodb]# ps aux | grep mongod
root 17032 0.2 1.1 3133124 43836 ? Sl 04:31 0:02 mongod -f /etc/mongodb_2.conf
root 17092 0.2 0.9 3127976 38404 ? Sl 04:31 0:02 mongod -f /etc/mongodb_3.conf
root 20488 0.0 0.0 103248 860 pts/2 S+ 04:47 0:00 grep mongod
```

1.2 Run a command in the shell connected to the old primary

```
repmore:PRIMARY> show dbs;
Tue Dec 17 04:48:02.392 DBClientCursor::init call() failed
```

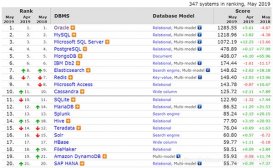

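1.3 Check the status from one of the former secondaries, as shown in the figure below:

Figure: replica set failover test

One of the former secondaries has become the new primary, so the failover succeeded.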
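A new primary could be elected here because two of the three members were still running, which is a strict majority of the set; if two members were down, the remaining one would stay a secondary. The sketch below expresses that rule against `rs.status()` output, where an unreachable member reports `health` 0. `hasMajority` is a hypothetical helper, and it assumes every member carries one vote, which is the default and is not changed by the config used here.

```javascript
// Hypothetical helper: can this set still elect a primary?
// Counts members reporting health 1 and requires a strict majority of all members.
// Assumes one vote per member (the default).
function hasMajority(status) {
  var up = status.members.filter(function (m) { return m.health === 1; }).length;
  return up > status.members.length / 2;
}
```

With the primary killed, this returns `true` (2 of 3 members up); with two members killed it would return `false`.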

2. Recovery

```
mongod -f /etc/mongodb.conf
```

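Start the primary server that was just shut down, then log in to it and check `rs.status();`: the replica set is back to its original state, with 127.0.0.1:27017 as primary again. This is because that member was given priority 2 in the configuration, higher than the other two, so once it rejoins and catches up it takes the primary role back.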
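To confirm that the set really returned to its preferred layout, you can compare the current primary with the highest-priority member in the configuration (127.0.0.1:27017 with priority 2 in this example). `isPreferredPrimary` is a hypothetical ES5 helper; it relies only on the `members`, `host`, and `priority` fields of the config document and the `name` and `stateStr` fields of the status document, and treats a missing priority as the default of 1.

```javascript
// Hypothetical helper: is the current primary the highest-priority member?
function isPreferredPrimary(config, status) {
  var best = null;
  config.members.forEach(function (m) {
    var p = m.priority === undefined ? 1 : m.priority; // priority defaults to 1
    if (best === null || p > best.priority) best = { host: m.host, priority: p };
  });
  var primary = (status.members || []).filter(function (m) {
    return m.stateStr === "PRIMARY";
  })[0];
  return Boolean(best && primary && primary.name === best.host);
}
```

In the mongo shell, `isPreferredPrimary(rs.conf(), rs.status())` should return `true` once the restarted member has taken the primary role back.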