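After a few days of study, I finally have a rough grasp of how to use PyTorch.

The code below is copied directly from the official documentation.

Next, I plan to try implementing other NLP tasks on my own.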


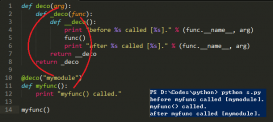

```python
# Author: Robert Guthrie

import torch
import torch.autograd as autograd
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

torch.manual_seed(1)

lstm = nn.LSTM(3, 3)  # Input dim is 3, output dim is 3
inputs = [autograd.Variable(torch.randn((1, 3)))
          for _ in range(5)]  # make a sequence of length 5

# initialize the hidden state.
hidden = (autograd.Variable(torch.randn(1, 1, 3)),
          autograd.Variable(torch.randn((1, 1, 3))))
for i in inputs:
    # Step through the sequence one element at a time.
    # after each step, hidden contains the hidden state.
    out, hidden = lstm(i.view(1, 1, -1), hidden)

# alternatively, we can do the entire sequence all at once.
# the first value returned by LSTM is all of the hidden states throughout
# the sequence. the second is just the most recent hidden state
# (compare the last slice of "out" with "hidden" below, they are the same)
# The reason for this is that:
# "out" will give you access to all hidden states in the sequence
# "hidden" will allow you to continue the sequence and backpropagate,
# by passing it as an argument to the lstm at a later time
# Add the extra 2nd dimension
inputs = torch.cat(inputs).view(len(inputs), 1, -1)
hidden = (autograd.Variable(torch.randn(1, 1, 3)),
          autograd.Variable(torch.randn((1, 1, 3))))  # clean out hidden state
out, hidden = lstm(inputs, hidden)
# print(out)
# print(hidden)


# Prepare the data
def prepare_sequence(seq, to_ix):
    idxs = [to_ix[w] for w in seq]
    tensor = torch.LongTensor(idxs)
    return autograd.Variable(tensor)


training_data = [
    ("The dog ate the apple".split(), ["DET", "NN", "V", "DET", "NN"]),
    ("Everybody read that book".split(), ["NN", "V", "DET", "NN"])
]
word_to_ix = {}
for sent, tags in training_data:
    for word in sent:
        if word not in word_to_ix:
            word_to_ix[word] = len(word_to_ix)
print(word_to_ix)
tag_to_ix = {"DET": 0, "NN": 1, "V": 2}

# These will usually be more like 32 or 64 dimensional.
# We will keep them small, so we can see how the weights change as we train.
EMBEDDING_DIM = 6
HIDDEN_DIM = 6


# Inherits from nn.Module
class LSTMTagger(nn.Module):

    def __init__(self, embedding_dim, hidden_dim, vocab_size, tagset_size):
        super(LSTMTagger, self).__init__()
        self.hidden_dim = hidden_dim

        # A lookup matrix of shape (vocab_size, embedding_dim)
        self.word_embeddings = nn.Embedding(vocab_size, embedding_dim)

        # Takes two dimension arguments.
        # The LSTM takes word embeddings as inputs, and outputs hidden states
        # with dimensionality hidden_dim.
        self.lstm = nn.LSTM(embedding_dim, hidden_dim)

        # The linear layer that maps from hidden state space to tag space
        self.hidden2tag = nn.Linear(hidden_dim, tagset_size)
        self.hidden = self.init_hidden()

    def init_hidden(self):
        # Before we've done anything, we dont have any hidden state.
        # Refer to the Pytorch documentation to see exactly
        # why they have this dimensionality.
        # The axes semantics are (num_layers, minibatch_size, hidden_dim)
        return (autograd.Variable(torch.zeros(1, 1, self.hidden_dim)),
                autograd.Variable(torch.zeros(1, 1, self.hidden_dim)))

    def forward(self, sentence):
        embeds = self.word_embeddings(sentence)
        lstm_out, self.hidden = self.lstm(
            embeds.view(len(sentence), 1, -1), self.hidden)
        tag_space = self.hidden2tag(lstm_out.view(len(sentence), -1))
        # dim=1 normalizes over the tags of each word; the original call
        # omitted dim, which newer PyTorch versions warn about.
        tag_scores = F.log_softmax(tag_space, dim=1)
        return tag_scores


# embedding dim, hidden dim, vocabulary size, number of tags
model = LSTMTagger(EMBEDDING_DIM, HIDDEN_DIM, len(word_to_ix), len(tag_to_ix))
# optim holds the various optimization algorithms
loss_function = nn.NLLLoss()
optimizer = optim.SGD(model.parameters(), lr=0.1)

# See what the scores are before training
# Note that element i,j of the output is the score for tag j for word i.
inputs = prepare_sequence(training_data[0][0], word_to_ix)
tag_scores = model(inputs)
print(tag_scores)

for epoch in range(300):  # again, normally you would NOT do 300 epochs, it is toy data
    for sentence, tags in training_data:
        # Step 1. Remember that Pytorch accumulates gradients.
        # We need to clear them out before each instance
        model.zero_grad()

        # Also, we need to clear out the hidden state of the LSTM,
        # detaching it from its history on the last instance.
        model.hidden = model.init_hidden()

        # Step 2. Get our inputs ready for the network, that is, turn them into
        # Variables of word indices.
        sentence_in = prepare_sequence(sentence, word_to_ix)
        targets = prepare_sequence(tags, tag_to_ix)

        # Step 3. Run our forward pass.
        tag_scores = model(sentence_in)

        # Step 4. Compute the loss, gradients, and update the parameters by
        # calling optimizer.step()
        loss = loss_function(tag_scores, targets)
        loss.backward()
        optimizer.step()

# See what the scores are after training
inputs = prepare_sequence(training_data[0][0], word_to_ix)
tag_scores = model(inputs)
# The sentence is "the dog ate the apple". i,j corresponds to score for tag j
# for word i. The predicted tag is the maximum scoring tag.
# Here, we can see the predicted sequence below is 0 1 2 0 1
# since 0 is index of the maximum value of row 1,
# 1 is the index of maximum value of row 2, etc.
# Which is DET NOUN VERB DET NOUN, the correct sequence!
print(tag_scores)
```
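The closing comments in the code say that the predicted tag for each word is the index of the largest score in its row. As a rough sketch of that decoding step, the helper below turns a table of scores into tag names. `decode_tags` and `example_scores` are hypothetical names and values made up for illustration; they are not part of the official tutorial. With the trained model you would pass `tag_scores.tolist()` instead, and `tag_scores.argmax(dim=1)` should give the same indices directly on the tensor.

```python
def decode_tags(score_rows, ix_to_tag):
    """Return the highest-scoring tag name for each row (one row per word)."""
    predicted = []
    for row in score_rows:
        # Index of the largest score in this row, i.e. a plain-Python argmax.
        best_ix = max(range(len(row)), key=lambda j: row[j])
        predicted.append(ix_to_tag[best_ix])
    return predicted


tag_to_ix = {"DET": 0, "NN": 1, "V": 2}
# Invert the mapping so indices can be turned back into tag names.
ix_to_tag = {ix: tag for tag, ix in tag_to_ix.items()}

# Made-up log-probabilities for three words (illustrative only, not real
# model output). Row i holds the scores of word i for DET, NN, V.
example_scores = [
    [-0.1, -2.5, -3.9],
    [-4.2, -0.05, -3.6],
    [-3.3, -3.9, -0.06],
]

print(decode_tags(example_scores, ix_to_tag))  # ['DET', 'NN', 'V']
```

Keeping the helper independent of PyTorch makes it easy to check by hand; for larger batches the tensor-level `argmax` is likely the better choice.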


Original post: https://blog.csdn.net/say_c_box/article/details/78802770