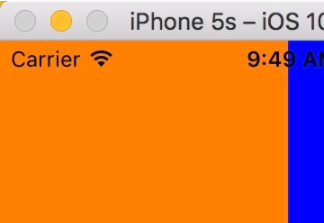

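Starting with iOS 10, third-party developers can add Siri-style speech recognition to their own apps. This is done through the Speech framework, the speech recognition framework that Siri itself relies on. Below is the core code; a `UITextView` and a `UIButton` are enough to demonstrate it.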

Step 1: Define the properties

    #import <Speech/Speech.h>
    #import <AVFoundation/AVFoundation.h>

    @interface ViewController () <SFSpeechRecognizerDelegate>
    // UI: the button toggles recording, the text view shows the transcription
    @property (strong, nonatomic) UIButton *siriBtu;
    @property (strong, nonatomic) UITextView *siriTextView;
    // Speech recognition objects
    @property (strong, nonatomic) SFSpeechRecognitionTask *recognitionTask;
    @property (strong, nonatomic) SFSpeechRecognizer *speechRecognizer;
    @property (strong, nonatomic) SFSpeechAudioBufferRecognitionRequest *recognitionRequest;
    // Captures audio from the microphone
    @property (strong, nonatomic) AVAudioEngine *audioEngine;
    @end
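The listings in this article do not show how `siriBtu` and `siriTextView` are created. Below is a minimal sketch, assuming the views are built in code rather than in a storyboard. The method name `setupViews`, the frames and the initial title are hypothetical; the method would live inside the `ViewController` implementation and be called at the start of `viewDidLoad`, before the button is first touched.

```objc
// Hypothetical helper (not from the original article): builds the two views in code.
- (void)setupViews {
    CGFloat width = CGRectGetWidth(self.view.bounds);

    // Text view that displays the transcription
    self.siriTextView = [[UITextView alloc] initWithFrame:CGRectMake(20, 80, width - 40, 200)];
    self.siriTextView.editable = NO;
    self.siriTextView.font = [UIFont systemFontOfSize:17];
    [self.view addSubview:self.siriTextView];

    // Button that starts and stops recording; wired to microphoneTap: from step 3
    self.siriBtu = [UIButton buttonWithType:UIButtonTypeSystem];
    self.siriBtu.frame = CGRectMake(20, 300, width - 40, 44);
    [self.siriBtu setTitle:@"开始录制" forState:UIControlStateNormal]; // "Start recording"
    [self.siriBtu addTarget:self
                     action:@selector(microphoneTap:)
           forControlEvents:UIControlEventTouchUpInside];
    [self.view addSubview:self.siriBtu];
}
```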

Step 2: Check speech recognition authorization

    - (void)viewDidLoad {
        [super viewDidLoad];

        // Recognize Mandarin Chinese (mainland China)
        NSLocale *cale = [[NSLocale alloc] initWithLocaleIdentifier:@"zh-CN"];
        self.speechRecognizer = [[SFSpeechRecognizer alloc] initWithLocale:cale];
        self.speechRecognizer.delegate = self;

        // Keep the button disabled until the user has granted permission
        self.siriBtu.enabled = NO;

        [SFSpeechRecognizer requestAuthorization:^(SFSpeechRecognizerAuthorizationStatus status) {
            BOOL isButtonEnabled = NO;
            switch (status) {
                case SFSpeechRecognizerAuthorizationStatusAuthorized:
                    isButtonEnabled = YES;
                    NSLog(@"Speech recognition is authorized");
                    break;
                case SFSpeechRecognizerAuthorizationStatusDenied:
                    NSLog(@"The user denied access to speech recognition");
                    break;
                case SFSpeechRecognizerAuthorizationStatusRestricted:
                    NSLog(@"Speech recognition is restricted on this device");
                    break;
                case SFSpeechRecognizerAuthorizationStatusNotDetermined:
                    NSLog(@"Speech recognition has not been authorized yet");
                    break;
                default:
                    break;
            }
            // The handler may run off the main thread, so update the UI on the main queue
            dispatch_async(dispatch_get_main_queue(), ^{
                self.siriBtu.enabled = isButtonEnabled;
            });
        }];

        self.audioEngine = [[AVAudioEngine alloc] init];
    }
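The authorization request in step 2 only works if the app declares why it needs speech recognition and the microphone. On iOS 10 and later this means adding two usage-description keys to `Info.plist`; without them the app is terminated when it requests access. The fragment below is a sketch: the key names are the system-defined ones, while the description strings are placeholders to replace with your own wording.

```xml
<!-- Info.plist entries; the description strings are placeholders -->
<key>NSSpeechRecognitionUsageDescription</key>
<string>Speech recognition is used to transcribe what you say.</string>
<key>NSMicrophoneUsageDescription</key>
<string>The microphone is used to record your speech.</string>
```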

Step 3: Handle the button tap

    - (void)microphoneTap:(UIButton *)sender {
        if ([self.audioEngine isRunning]) {
            // Stop capturing and tell the request that no more audio is coming
            [self.audioEngine stop];
            [self.recognitionRequest endAudio];
            self.siriBtu.enabled = YES;
            [self.siriBtu setTitle:@"开始录制" forState:UIControlStateNormal]; // "Start recording"
        } else {
            [self startRecording];
            [self.siriBtu setTitle:@"停止录制" forState:UIControlStateNormal]; // "Stop recording"
        }
    }

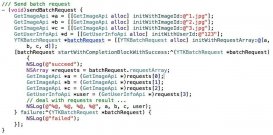
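Step 2 sets the view controller as the recognizer's delegate, but none of the listings implements a delegate method. Below is a minimal sketch of the `SFSpeechRecognizerDelegate` availability callback, assuming the only reaction needed is to enable or disable the record button. Dispatching to the main queue is a precaution, not something the article specifies.

```objc
#pragma mark - SFSpeechRecognizerDelegate

// Called when the recognizer becomes available or unavailable,
// for example when the network connection changes.
- (void)speechRecognizer:(SFSpeechRecognizer *)speechRecognizer
   availabilityDidChange:(BOOL)available {
    // Sketch: mirror the recognizer's availability onto the record button.
    dispatch_async(dispatch_get_main_queue(), ^{
        self.siriBtu.enabled = available;
    });
}
```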

Step 4: Start recording and convert speech to text

    - (void)startRecording {
        // Cancel any recognition task that is still running
        if (self.recognitionTask) {
            [self.recognitionTask cancel];
            self.recognitionTask = nil;
        }

        // Configure the shared audio session for recording
        AVAudioSession *audioSession = [AVAudioSession sharedInstance];
        BOOL audioBool = [audioSession setCategory:AVAudioSessionCategoryRecord error:nil];
        BOOL audioBool1 = [audioSession setMode:AVAudioSessionModeMeasurement error:nil];
        BOOL audioBool2 = [audioSession setActive:YES withOptions:AVAudioSessionSetActiveOptionNotifyOthersOnDeactivation error:nil];
        // All three calls must succeed for the session to be usable
        if (audioBool && audioBool1 && audioBool2) {
            NSLog(@"Audio session is ready");
        } else {
            NSLog(@"Some audio session settings are not supported");
        }

        self.recognitionRequest = [[SFSpeechAudioBufferRecognitionRequest alloc] init];
        AVAudioInputNode *inputNode = self.audioEngine.inputNode;
        // Deliver partial transcriptions while the user is still speaking
        self.recognitionRequest.shouldReportPartialResults = YES;

        self.recognitionTask = [self.speechRecognizer recognitionTaskWithRequest:self.recognitionRequest resultHandler:^(SFSpeechRecognitionResult * _Nullable result, NSError * _Nullable error) {
            BOOL isFinal = NO;
            if (result) {
                // Show the best transcription so far
                self.siriTextView.text = [[result bestTranscription] formattedString];
                isFinal = [result isFinal];
            }
            // On an error or the final result, tear down the audio pipeline
            if (error || isFinal) {
                [self.audioEngine stop];
                [inputNode removeTapOnBus:0];
                self.recognitionRequest = nil;
                self.recognitionTask = nil;
                self.siriBtu.enabled = YES;
            }
        }];

        // Feed microphone buffers into the recognition request
        AVAudioFormat *recordingFormat = [inputNode outputFormatForBus:0];
        [inputNode installTapOnBus:0 bufferSize:1024 format:recordingFormat block:^(AVAudioPCMBuffer * _Nonnull buffer, AVAudioTime * _Nonnull when) {
            [self.recognitionRequest appendAudioPCMBuffer:buffer];
        }];

        [self.audioEngine prepare];
        BOOL audioEngineBool = [self.audioEngine startAndReturnError:nil];
        NSLog(@"%d", audioEngineBool);
        self.siriTextView.text = @"我是小冰!
