最近弄爬虫,遇到的一个问题就是如何使用post方法模拟登陆爬取网页。

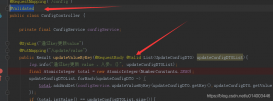

下面是极简版的代码:

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

|

import java.io.BufferedReader;import java.io.InputStreamReader;import java.io.OutputStreamWriter;import java.io.PrintWriter;import java.net.HttpURLConnection;import java.net.URL;import java.util.HashMap;public class test { //post请求地址 private static final String POST_URL = ""; //模拟谷歌浏览器请求 private static final String USER_AGENT = ""; //用账号登录某网站后 请求POST_URL链接获取cookie private static final String COOKIE = ""; //用账号登录某网站后 请求POST_URL链接获取数据包 private static final String REQUEST_DATA = ""; public static void main(String[] args) throws Exception { HashMap<String, String> map = postCapture(REQUEST_DATA); String responseCode = map.get("responseCode"); String value = map.get("value"); while(!responseCode.equals("200")){ map = postCapture(REQUEST_DATA); responseCode = map.get("responseCode"); value = map.get("value"); } //打印爬取结果 System.out.println(value); } private static HashMap<String, String> postCapture(String requestData) throws Exception{ HashMap<String, String> map = new HashMap<>(); URL url = new URL(POST_URL); HttpURLConnection httpConn = (HttpURLConnection) url.openConnection(); httpConn.setDoInput(true); // 设置输入流采用字节流 httpConn.setDoOutput(true); // 设置输出流采用字节流 httpConn.setUseCaches(false); //设置缓存 httpConn.setRequestMethod("POST");//POST请求 httpConn.setRequestProperty("User-Agent", USER_AGENT); httpConn.setRequestProperty("Cookie", COOKIE); PrintWriter out = new PrintWriter(new OutputStreamWriter(httpConn.getOutputStream(), "UTF-8")); out.println(requestData); out.close(); int responseCode = httpConn.getResponseCode(); StringBuffer buffer = new StringBuffer(); if (responseCode == 200) { BufferedReader reader = new BufferedReader(new InputStreamReader(httpConn.getInputStream(), "UTF-8")); String line = null; while ((line = reader.readLine()) != null) { buffer.append(line); } reader.close(); httpConn.disconnect(); } map.put("responseCode", new Integer(responseCode).toString()); map.put("value", buffer.toString()); return map; }} |

以上这篇使用Post方法模拟登陆爬取网页的实现方法就是小编分享给大家的全部内容了,希望能给大家一个参考,也希望大家多多支持服务器之家。